Some Design thoughts

Duane Couchot-Vore

Content:

When you're building a web site, especially if you're crafting it all by hand, thousands of thoughts go through your head. Some of them are relatively trivial, such as, "This is a good place to use the JavaScript coalescing operator." But others have a more profound effect on the final product. Here are a few of the ones that got more cerebrum time.

Where's Tumbler?

My "Share" dropdown has icons for four social media sites: Facebook, Twitter, Linked-In, and Pinterest. This site doesn't exactly lend itself well to being shared on Pinterest, but you never know. So where are the others? In the HTML, commented out, are icons for five others, including Tumbler and SnapChat. The four that are online are the four that I found direct share links for. Although they have these direct links, the social media companies really, really want you to use their JavaScript APIs.

It's all about advertising revenue. You don't really think all those companies create social media sites for you convenience in sharing your innermost thoughts, do you? The approach is two-pronged: present advertising to you for a profit, and collect information about your interests and sell that to other advertisers for a profit. You have become the product and didn't even know it. I don't suppose the the inventors of the World-Wide Web at CERN forsaw it turning into the World-Wide Spy Network.

Truth be told, I like directed advertising. I'd much rather see ads for products I'm actually interested in, like single-board computers and the latest FPGA from Xilinx, instead of those for horse wax and stupid Android games. However, those tracking apps come at a price, none of which benefits anyone other than the social media trackers. To whit:

- They slow down page loading.

- They burden the visitor's CPU and RAM.

- And, I would feel compelled to throw out a pop-up warning to visitors that they are being tracked.

Had I included the APIs for the nine social media that I originally considered, that would be at least nine other destinations your browser would have to visit just to load the page. I say "at least" because who really knows what other chattering they're doing in the background? Nine isn't really that bad, I suppose. The record I actually measured was 118. You read that right. That page spawned out to 118 different destinations, and all I did was load it. And you wonder wonder why the Web is so slow!

So here's the bottom line. I'll add the other social media icons when one of the following conditions are met:

- I find direct links for them that don't involve installing their spyware.

- Visitors convince me to go ahead and use the APIs, spyware or not.

It Doesn't Work in Internet Explorer 5!

I'd wager that most modern web sites don't work right with IE5. The Web is a constantly evolving entity, with ever increasing capabilities. The XMLHTTPRequest object didn't exist in JavaScript until 2005. While it is possible, at least in some degree, to support old browsers, doing so can double or triple — or more — the amount of work required to build a site. I did think of using polyfills to handle some of the new features in older browsers, but then I could no longer say that this site was 100% my own code.

This aspect of design decision is all about targeting your audience. If I were trying to sell lace doilies to grandmothers still using Windows 95, I'd make my site as backward compatible as possible. But my target audience is people who are technologically savvy enough to be using an updated browser. It just wasn't worth another 100 hours to make the site work on every browser back to Netscape Navigator.

Swiper, no Swiping!

Or rather, Scraper, no scraping! Right along with trackers are scrapers, those nasty spiders that go around infiltrating web sites to find phone numbers and email addresses they can sell. Once again, you are the product. That's why there is no email address on this site in text form. There is a contact form that sends the email from my web server. No one nefarious need know what that email address is. There is, however, an email address and phone number in an image. It is possible these days to scan images looking for dirt, but I believe we're still at the point where doing so isn't fast enough to make it practical when you're search 50 million web sites.

Also on the contact form is a phone number. You see it in text, but it never exists that way in the source code. It's encoded. I hesitate to say encrypted, because it's not that fancy. Just fancy enough to keep scrapers from identifying it. It's theoretically possible for them to actually run the JavaScript and then scan through the DOM, but we're back to the principle of making it impractical. You can't stop the leeches completely, but you don't want to be the low-hanging fruit.

Paged Database Results

One of my sub-tasks while writing the blog portion was to look for any recent improvements to SQL to the tune of paging search results. Not really. The standard technique is to use the OFFSET and LIMIT keywords. That's quite reasonable for small result sets. "...OFFSET 240 LIMIT 20" is practical, barely a glitch. But for large result sets, something like "...OFFSET 242000 LIMIT 20" achieves the preposterous. The database will find 242020 results and throw away the first 242000. A mammoth waste of time and resources! On top of that, if records are being added and deleted by other processes, page boundaries are likely to change between requests. There are few things more maddening than the result that was just on the previous page now being on the next one.

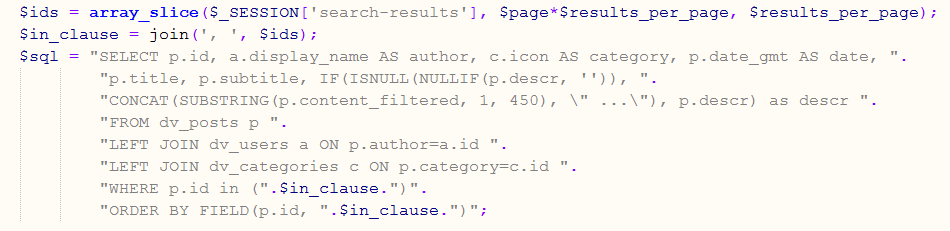

Then comes the recommendation to find another field that can be used as a key, like the timestamp on the last record retrieved, and search for later timestamps. That's fine if you're sorting by date and time, but in the general case doing that is a royal pain in the butt because there is rarely a field that works conveniently. What surprises me is that nowhere did I find the recommendation to do what I normally do, though people have asked about using this method. Just store the primary keys. It might not be practical to store 500000 results per session, but it is practical to save 500000 integers. And blazingly fast, maybe not in PHP, but if you're serving a system that large you would be using a CGI script written in something like C anyway. Page boundaries remain stable. The only gotcha is that if a record gets deleted, you might have a page with one less result than normal. So what? But remember the results probably won't be in the same order without the ORDER BY FIELD clause. See left.

I don't expect this blog to ever reach 500,000 posts, but I did it this way anyway, not just because I've always done it that way, but because it seems like the right thing to do.